Claude and Independently Verifiable Steps

How I improved my prompting and upgraded ~7 years of frontend dependencies of this blog using Claude

The prompt progression

Earlier this year I started using AI coding tools. It's been a learning curve.

When I first started, it was tempting to give my agent very little to go on, such as "write tests" or "upgrade frontend dependencies", and let it do its thing.

I quickly realized it's like asking a wedding DJ to play something with a good beat, only to be met with 7 minutes of avant-garde German industrial techno. Sure, the beat is technically impeccable, but now grandma is crying and the catering staff look concerned.

System prompts

So I started working on system prompts: one prompt for mocks, another for tests, one for fun ("Your responses must be in the style of Matt Levine, the Bloomberg author"), and another for the project description and general coding style...

And the results slowly got much better. A good system prompt is a big improvement: it guides your intern towards best practices, though it still lacks specificity.

We've now asked the DJ to play something with a good beat that people can dance to. The DJ listened and started playing hardstyle techno. People do dance to this, but it's not 4am at Berghain, and people don't bring ecstasy to a wedding.

Agents

On top of that, I have specific "agent" prompts saved that I sometimes copy-paste before the actual request. In Claude Code this is easy with /agents, but in VS Code (which I use at work) I just copy-paste them like a caveman.

One is a code architect scrutinizing my changes, another is an expert security reviewer, and a third focuses on performance. I found it helpful to repeat important points like "don't flatter" and "scrutinize", and generally they work reasonably well. I use these to come up with potential improvements, not for actual coding, and some of their suggestions end up being valuable.

It honestly reminds me of that South Park episode where Butters "has multiple personalities".

Me: "You think it would be possible for me to speak with 'code architect' Claude?"

Claude: "Code architect Claude is on the case!"

Silly, but it helps.

The DJ is now tasked with playing a popular song people can dance to, and they happily proceed to blast the kind of generic royalty-free pop you'd hear at a corporate seminar. Musically bankrupt, but at least grandma stopped crying.

Coding prompts

All of the above is really helpful. But in the end, for any given task, the prompt has to be very specific. Prompts should also tackle bite-sized parts of the application, or the intern will start hallucinating quickly and badly.

For example, I recently wrote an indexer that queries SageMaker and stores the results in S3 buckets. There is a lot of business logic on top that I won't go into. Asking the agent to do the whole thing would end in tears, but asking it to do small, specific pieces drastically improves the quality of its results. Something like:

"Implement a function which queries SageMaker serverless inference. The signature should be the following {pseudocode_signature}. The URL can be found in {some_config}. When anything errors out, it should be wrapped according to our standards like {some_example}. This function should be placed {somewhere_in_code}"
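To make that concrete, here is a rough Python sketch of the kind of function such a prompt might produce. It is not the code from that indexer: the `InferenceError` wrapper, the `query_serverless_inference` name and the JSON payload shape are hypothetical stand-ins for the placeholders above. It assumes the endpoint is called through boto3's `sagemaker-runtime` client (`invoke_endpoint`) by endpoint name rather than URL, and the client is passed in so tests can substitute a fake.

```python
import json
from typing import Any, Protocol


class InferenceError(Exception):
    """Hypothetical project-standard error: wraps any failure during inference."""

    def __init__(self, endpoint: str, cause: Exception) -> None:
        super().__init__(f"sagemaker inference failed for endpoint {endpoint!r}: {cause}")
        self.endpoint = endpoint
        self.cause = cause


class RuntimeClient(Protocol):
    """The one method of boto3's "sagemaker-runtime" client this sketch relies on."""

    def invoke_endpoint(self, **kwargs: Any) -> dict: ...


def query_serverless_inference(client: RuntimeClient, endpoint_name: str, payload: dict) -> dict:
    """Send `payload` as JSON to a SageMaker endpoint and return the decoded JSON reply.

    `endpoint_name` would come from config (hypothetical; the prompt leaves it as {some_config}).
    """
    try:
        response = client.invoke_endpoint(
            EndpointName=endpoint_name,
            ContentType="application/json",
            Accept="application/json",
            Body=json.dumps(payload).encode("utf-8"),
        )
        # response["Body"] is a stream; read() returns the raw bytes of the model's reply.
        return json.loads(response["Body"].read())
    except Exception as exc:
        # Wrap every failure (network, HTTP, decoding) in the project's error type.
        raise InferenceError(endpoint_name, exc) from exc
```

In real use the client would be `boto3.client("sagemaker-runtime")`; injecting it keeps the function trivially testable, which pays off when asking the agent to write the tests too.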

Even then, I often need to fix a thing here and there to fit our code standards, but it's still a big time saver.

It is also very helpful with everyone's least favourite part of the job, writing tests, provided that you have a good system prompt and that you tell it specifically which cases need to be tested and which assertions need to be made.

Now we've asked the DJ to play a celebratory 70s soul and funk song that will bring everyone, from my parents to my friends, together on the dance floor. And the party starts :)

Large scale changes

With the above, the intern is now very helpful and delivers solid results on a lot of our small changes. We still need to bring it all together, but it's a big time saver nonetheless.

But what about big, multi-step changes, where we need to protect ourselves from the limited context window?

One recent example is upgrading this blog's frontend dependencies from ~2019 versions to current releases.

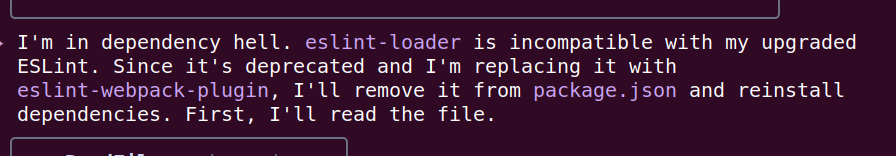

Note: I tried one-shotting it a few times, and at one point the amusingly self-aware Gemini ended up in dependency hell multiple times.

Plan

First, thoroughly explain the current situation and your expectations. Ask it to explore the code and think about how to tackle the problem. Something like:

Use frontend-specialist agent. This project is my blog, backend is Golang and frontend is React with some Node for server side rendering. I developed this close to a decade ago and never touched the code again. The frontend dependencies are severely outdated and I want to upgrade them to the most recent versions. You are tasked to thoroughly go through the frontend part of the project to understand the dependencies and architecture and come up with a step by step, independently verifiable plan on how to upgrade. Each step should be verifiable on its own!

It will do its thing and propose a plan. The plan will likely be pretty solid, but not perfect. It's our job to fix the parts of the plan that don't fit our needs (by telling it what to fix, remove or add). If we're lucky, we can fit the back and forth in the same context, but if the ping pong of finding a proper solution gets too long, it's better to clear the context and start over with a better prompt (or summarize the good parts of the plan in the new prompt).

Once the plan looks sound, send another prompt:

Continue using frontend-specialist. The plan looks sound. Write the plan down into TODO.md. Group it into phases and put unmarked checkboxes at each step. Each step should be small and independently verifiable. Each phase should be specific enough that we can continue working on it with a clean context window. Think hard

This is roughly what I used for my frontend upgrade. The plan it came up with is in TODO.md.
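The exact layout of TODO.md is up to Claude, but assuming it uses one `##` (or `###`) heading per phase and `- [ ]` / `- [x]` checkboxes per step (an assumption about the format, not a copy of the file), a small Python sketch like the following reports how far along each phase is. Because every step is a checkbox in a file, progress survives a context reset.

```python
import re
from typing import List, Tuple

# Assumed TODO.md layout: "## Phase ..." (or "###") headings, "- [ ]" / "- [x]" steps.
PHASE_RE = re.compile(r"^#{2,3}\s+(?P<title>.+?)\s*$")
STEP_RE = re.compile(r"^\s*[-*]\s+\[(?P<mark>[ xX])\]\s+\S")


def summarize_plan(todo_text: str) -> List[Tuple[str, int, int]]:
    """Return (phase title, checked steps, total steps) for every phase in a TODO.md."""
    summary: List[Tuple[str, int, int]] = []
    for line in todo_text.splitlines():
        heading = PHASE_RE.match(line)
        if heading:
            summary.append((heading["title"], 0, 0))
            continue
        step = STEP_RE.match(line)
        if step and summary:  # checkboxes before the first phase heading are ignored
            title, done, total = summary[-1]
            checked = 1 if step["mark"] != " " else 0
            summary[-1] = (title, done + checked, total + 1)
    return summary
```

A phase whose count reads n/n is finished; the first phase that doesn't is what "the next unfinished phase" refers to.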

Note: "think hard" is a neat little trick to trigger a bigger thinking budget. From Anthropic:

We recommend using the word "think" to trigger extended thinking mode, which gives Claude additional computation time to evaluate alternatives more thoroughly. These specific phrases are mapped directly to increasing levels of thinking budget in the system: "think" < "think hard" < "think harder" < "ultrathink." Each level allocates progressively more thinking budget for Claude to use.

Executing the plan

Once the plan was done, I just ran the following prompt in a loop.

My prompt:

See TODO.md. Proceed with the next unfinished phase. Once the phase is completed, mark the checkboxes as done and let me know
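The bookkeeping this prompt asks for is mechanical. Under the same assumed TODO.md layout (`##`/`###` phase headings, `- [ ]` steps), a hypothetical helper that finds the first phase with open checkboxes and ticks them could look like this:

```python
import re
from typing import Optional, Tuple

# Assumed TODO.md layout: "## Phase ..." (or "###") headings, "- [ ] step" checkboxes.
PHASE_RE = re.compile(r"^#{2,3}\s+(?P<title>.+?)\s*$")
OPEN_STEP_RE = re.compile(r"^(\s*[-*]\s+)\[ \]")


def complete_next_phase(todo_text: str) -> Tuple[Optional[str], str]:
    """Tick every open checkbox in the first phase that still has one.

    Returns (title of that phase, updated text), or (None, unchanged text)
    when every phase is already done.
    """
    lines = todo_text.splitlines(keepends=True)
    headings = [i for i, line in enumerate(lines) if PHASE_RE.match(line)]
    # Each phase spans from its heading to the next heading (or end of file).
    for start, end in zip(headings, headings[1:] + [len(lines)]):
        if any(OPEN_STEP_RE.match(line) for line in lines[start + 1:end]):
            for i in range(start + 1, end):
                lines[i] = OPEN_STEP_RE.sub(r"\1[x]", lines[i], count=1)
            return PHASE_RE.match(lines[start])["title"], "".join(lines)
    return None, todo_text
```

In the loop described here, Claude does the ticking itself; a helper like this only sketches that step, for example to script the loop or to double-check what was marked before committing.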

I was a little lazy and did not verify each step at all. At the end of each phase I just committed the changes, cleared the context and repeated the prompt.

An hour or so later the upgrade was done! You can see the full changes and commits in this PR.

Results

At first glance everything seemed to work, but there were a few hiccups:

- highlight.js didn't fully work
- the new build step overrode my public folder instead of appending to it
- SSR didn't work

These needed additional debugging and prompting, but overall I consider it a big success. Since writing the code for this blog I've lost all interest in frontend work, so being able to upgrade all the frontend dependencies in an afternoon is pretty fantastic.

Conclusion

AI coding is, imo, a game changer. I've gone from big skeptic to full-blown believer within the past year.

Still, it's garbage in, garbage out. And the whole community, including the people at Anthropic, is still figuring out best practices to get good results.

One of the biggest challenges is context management. With too much context, the LLM forgets what we're doing and hallucinates more and more. With too little, it won't understand how to properly approach the problem.

I've found the "independently verifiable steps" plan to work very well for larger-scale changes such as refactoring. It allows clearing the context between phases, which reduces the chance of hallucination while still keeping track of progress and of which changes are done and which are still to do.