Updating Elasticsearch aliases is sometimes atomic

Updating ES aliases is atomic within a single request, but when multiple alias-change requests are sent at the same time, the individual changes appear to go through some sort of internal queue rather than being applied request by request.

Background

I sometimes work on an ES-based search application. Managing indices is hard, and index aliases let us reindex without interrupting the application that performs the queries.

Reindexing is necessary whenever templates change. This can be due to changes in synonyms or stopwords, changes in fields and their analyzers, changes to the sharding strategy, or any number of other reasons, depending on the specific application setup.

My requirements

The application I'm working on is built specifically for search, and the setup I'm running comes with the following requirements and constraints:

- A search request must always search exactly one index
- An alias must always point to exactly one index
- A process that started reindexing can fail, and another process should then pick it up
- If the pod that started reindexing dies, another pod should first check whether the reindex task is already running and continue from there
- Multiple processes can start the reindexing job at the same time
- Multiple processes can end up monitoring the same reindex task and, once reindexing completes, executing the same alias updates at the same time

The last point lands us in a bit of a pickle. As it happens, if multiple requests updating aliases are executed at the same time, Elasticsearch can end up with the same alias on more than one index.
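One way to detect this condition is to fetch the alias with GET /_alias/alias_1 and count the indices in the response. The sketch below is a hypothetical helper (the module name AliasInvariant is made up for illustration) and assumes the decoded response body has the shape index name => %{"aliases" => %{alias name => settings}}. It only uses the standard library, so it can be checked without a cluster.

```elixir
defmodule AliasInvariant do
  # Hypothetical helper, not part of the original project.
  # Assumes `body` is the decoded JSON of GET /_alias/<alias>, shaped like
  # %{"index_name" => %{"aliases" => %{"alias_name" => %{...}}}}.

  @doc "Returns the sorted names of the indices that carry `alias_name`."
  def indices_for(body, alias_name) when is_map(body) do
    body
    |> Enum.filter(fn {_index, entry} ->
      entry |> Map.get("aliases", %{}) |> Map.has_key?(alias_name)
    end)
    |> Enum.map(fn {index, _entry} -> index end)
    |> Enum.sort()
  end

  @doc "True only when the alias points to exactly one index."
  def single_index?(body, alias_name), do: length(indices_for(body, alias_name)) == 1
end

# Example: an alias that ended up on two indices.
duplicated = %{
  "test_index" => %{"aliases" => %{"alias_1" => %{}}},
  "test_index2" => %{"aliases" => %{"alias_1" => %{}}}
}

AliasInvariant.indices_for(duplicated, "alias_1")
# => ["test_index", "test_index2"]
```

A check like this could run after every alias update and flag the broken state before searches start returning duplicated results.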

Setup

For the purpose of this post I have an Elasticsearch cluster running on localhost:9200:

```shell
docker run -p 9200:9200 -e "discovery.type=single-node" -e "xpack.security.enabled=false" elasticsearch:8.18.0
```

I have also set up two empty indices, "test_index" and "test_index2" (ES docs):

```elixir
defmodule ESAliases.IndexManagement.CreateIndices do
  # Creates an empty index with no replicas (we run a single-node cluster).
  def run(index_name) do
    req =
      Req.new(
        url: "http://localhost:9200/" <> index_name,
        headers: %{"content-type": "application/json"},
        json: %{settings: %{number_of_replicas: 0}}
      )

    Req.put!(req)
  end
end

ESAliases.IndexManagement.CreateIndices.run("test_index")
ESAliases.IndexManagement.CreateIndices.run("test_index2")
```

A single alias change request is always atomic

To test this, let's first run a successful request which applies two aliases to the index (ES docs):

```elixir
defmodule ESAliases.IndexManagement.ApplyTwoAliases do
  # Adds alias_1 and alias_2 to test_index in a single _aliases request.
  def run() do
    req =
      Req.new(
        url: "http://localhost:9200/_aliases",
        headers: %{"content-type": "application/json"},
        json: %{
          actions: [
            %{add: %{index: "test_index", alias: "alias_1"}},
            %{add: %{index: "test_index", alias: "alias_2"}}
          ]
        }
      )

    Req.post!(req)
  end
end

ESAliases.IndexManagement.ApplyTwoAliases.run()
```

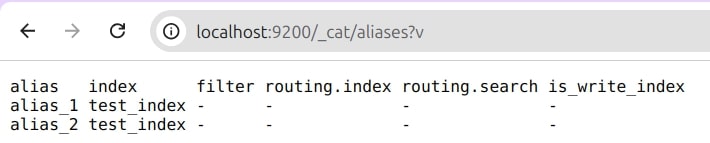

As we can see, the aliases were successfully created:

Now let's test atomicity. This time we send a request in which one action would succeed on its own, but the other would fail:

```elixir
defmodule ESAliases.IndexManagement.FailTwoAliases do
  # The first action is valid on its own; the second targets a missing index.
  def run() do
    req =
      Req.new(
        url: "http://localhost:9200/_aliases",
        headers: %{"content-type": "application/json"},
        json: %{
          actions: [
            %{add: %{index: "test_index", alias: "alias_3"}},
            %{add: %{index: "index_doesnt_exist", alias: "alias_4"}}
          ]
        }
      )

    IO.inspect(Req.post!(req))
  end
end

ESAliases.IndexManagement.FailTwoAliases.run()
```

In this case the first action would succeed on its own, since we're adding alias_3 to the existing index test_index. The second action, however, fails because we're adding alias_4 to an index that does not exist.

And indeed the request fails with status 404 and the reason "no such index [index_doesnt_exist]":

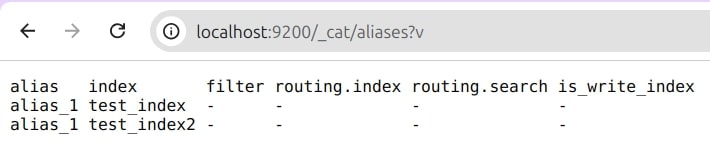

Upon checking the aliases, we see that nothing changed: alias_3 was not added to test_index either.

This is good! We see that if any of the actions fail, the whole request fails. Great!
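The all-or-nothing behaviour can be pictured with a toy in-memory model. This is not how Elasticsearch implements it: AtomicAliasModel is a hypothetical module that only understands add actions and keeps aliases as a map of alias name => MapSet of index names. Every action is applied to a working copy, and as soon as one action fails the original state is returned untouched, mirroring the 404 case above.

```elixir
defmodule AtomicAliasModel do
  # Hypothetical toy model, not Elasticsearch code. Only `add` actions are
  # modelled. State: %{alias_name => MapSet of index names}.

  # Applies every action to a working copy; on the first failure the
  # ORIGINAL state is returned, so a request either fully applies or not at all.
  def apply_request(state, actions, existing_indices) do
    Enum.reduce_while(actions, {:ok, state}, fn action, {:ok, acc} ->
      case apply_action(acc, action, existing_indices) do
        {:ok, new_acc} -> {:cont, {:ok, new_acc}}
        {:error, reason} -> {:halt, {:error, reason, state}}
      end
    end)
  end

  defp apply_action(state, %{add: %{index: index, alias: name}}, existing) do
    if index in existing do
      {:ok, Map.update(state, name, MapSet.new([index]), &MapSet.put(&1, index))}
    else
      {:error, "no such index [#{index}]"}
    end
  end
end

state = %{"alias_1" => MapSet.new(["test_index"]), "alias_2" => MapSet.new(["test_index"])}

actions = [
  %{add: %{index: "test_index", alias: "alias_3"}},
  %{add: %{index: "index_doesnt_exist", alias: "alias_4"}}
]

{:error, reason, unchanged} =
  AtomicAliasModel.apply_request(state, actions, ["test_index", "test_index2"])

# reason is "no such index [index_doesnt_exist]" and unchanged == state,
# so alias_3 was not added either.
```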

Concurrent alias-change requests

However, things are a little more complicated when you execute concurrent requests.

It looks like Elasticsearch builds an internal queue of alias changes to execute, and this queue does not necessarily preserve the order of the actions we send through the API.

In the application, the alias change requests look something like this:

- Remove alias alias_1 from all indices
- Add alias alias_1 to the new index

This works fine within a single request: a single request is applied atomically, so it effectively swaps the alias from the old index to the new one.
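Building the actions in one place helps keep the removal and the addition inside the same request. AliasSwap below is a hypothetical helper (not from the original project) that returns the same body the post sends to POST /_aliases. It only builds data; sending it is left to the caller.

```elixir
defmodule AliasSwap do
  # Hypothetical helper: builds the body for POST /_aliases that moves
  # `alias_name` from whatever index it is on to `new_index`.
  # Both actions travel in one request, so Elasticsearch applies them together.
  def body(alias_name, new_index) do
    %{
      actions: [
        # "*" matches every index, so any existing binding is dropped...
        %{remove: %{index: "*", alias: alias_name}},
        # ...and the alias is attached to the new index in the same request.
        %{add: %{index: new_index, alias: alias_name}}
      ]
    }
  end
end

AliasSwap.body("alias_1", "test_index2")
```

With the setup from this post, the body could be sent the same way as the other snippets, e.g. Req.post!(Req.new(url: "http://localhost:9200/_aliases", json: AliasSwap.body("alias_1", "test_index2"))).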

As mentioned earlier, in some unlucky cases multiple processes can execute the alias request at the same time. In the unluckiest case, the two processes try to point the alias at different indices.

Note: this would happen if process1 starts reindexing, but the template changes in the meantime, so process2 starts reindexing again into a whole new index. They then both finish at the same time and each tries to point the alias at the index it created.

Let's recreate this scenario with some simple concurrent code:

```elixir
defmodule ESAliases.IndexManagement.AliasConcurrent do
  # Moves alias_1 to `index_name` in one request: remove it from every
  # index, then add it to the target index.
  def run(index_name) do
    req =
      Req.new(
        url: "http://localhost:9200/_aliases",
        headers: %{"content-type": "application/json"},
        json: %{
          actions: [
            %{remove: %{index: "*", alias: "alias_1"}},
            %{add: %{index: index_name, alias: "alias_1"}}
          ]
        }
      )

    IO.inspect(Req.post!(req))
  end
end

# Fire both alias swaps concurrently and wait for both responses.
tasks = []
tasks = [Task.async(fn -> ESAliases.IndexManagement.AliasConcurrent.run("test_index") end) | tasks]
tasks = [Task.async(fn -> ESAliases.IndexManagement.AliasConcurrent.run("test_index2") end) | tasks]
Task.await_many(tasks)
```

In the code above we run 2 tasks concurrently. Each attempts to remove alias alias_1 from all indices and then add alias_1 to an index (first task to index "test_index" and second task to "test_index2").

Intuitively, I'd expect one of them to be fully executed (remove alias -> then add) and then the other to be fully executed (remove alias -> then add), so we would inevitably end up with the alias pointing to exactly one index.

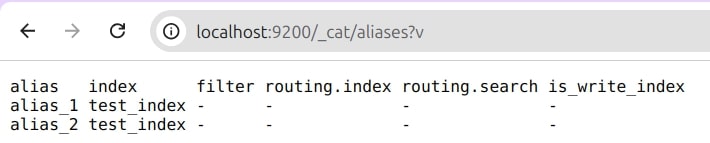

But that is not the case. After executing the above code and checking our aliases, we see the following:

What happened is that alias_1 got added to both indices. I don't have a good explanation of why, or of what exactly happens in Elasticsearch behind the scenes, but it looks like it internally queued the alias removals ahead of the alias additions, essentially executing our changes in the following order:

- Remove "alias_1" from all indices
- Remove "alias_1" from all indices
- Add "alias_1" to "test_index"
- Add "alias_1" to "test_index2"

Very unfortunate.
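The suspected ordering can be reproduced with a small model. AliasOrderingModel is a hypothetical module that tracks which indices alias_1 points to as a MapSet and replays the four actions from the two requests either request by request, or with all removals first. It encodes the guess above, not Elasticsearch's actual internals.

```elixir
defmodule AliasOrderingModel do
  # Toy model of the guessed ordering, not Elasticsearch internals.
  # State: MapSet of the indices that alias_1 points to.

  # {:remove, "*"} drops the alias from every index; {:add, index} adds one.
  def apply_action(_indices, {:remove, "*"}), do: MapSet.new()
  def apply_action(indices, {:add, index}), do: MapSet.put(indices, index)

  # The actions one swap request sends: remove from all, then add.
  def request(index), do: [{:remove, "*"}, {:add, index}]

  # Replays actions in order, starting from an alias that points nowhere.
  def replay(actions), do: Enum.reduce(actions, MapSet.new(), &apply_action(&2, &1))

  # What one might expect: request A fully applied, then request B.
  def sequential(a, b), do: replay(request(a) ++ request(b))

  # The suspected ordering: every removal before every addition.
  def removals_first(a, b) do
    {removals, additions} =
      Enum.split_with(request(a) ++ request(b), &match?({:remove, _}, &1))

    replay(removals ++ additions)
  end
end

Enum.sort(AliasOrderingModel.sequential("test_index", "test_index2"))
# => ["test_index2"]
Enum.sort(AliasOrderingModel.removals_first("test_index", "test_index2"))
# => ["test_index", "test_index2"]
```

Replaying request by request always leaves exactly one index, while moving both removals to the front leaves two, which matches what the screenshot shows.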

Solution (or abusing is_write_index)

Note: this solution works for the application I'm working on because each alias must always point to exactly one index.

When adding Elasticsearch aliases, you can set is_write_index: true. This is useful for things like logging, where you might have date-based indices: reads should span multiple indices (past dates as well as the current one), but writes should always go to the latest index. One way to achieve this is to point an alias at multiple indices, but set is_write_index: true only on the latest one.
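For the logging case, the actions could look roughly like the sketch below. The module name LogAliasActions and the date-stamped index names (logs-2024.05.01 and so on) are assumptions for illustration; the sketch also assumes the names sort chronologically, so the lexicographically largest one is treated as the latest.

```elixir
defmodule LogAliasActions do
  # Hypothetical helper: adds `alias_name` to every index so reads span all
  # of them, but marks only the newest index as the write index.
  # Assumes index names sort chronologically (e.g. logs-YYYY.MM.DD).
  def actions(alias_name, indices) when indices != [] do
    latest = Enum.max(indices)

    for index <- indices do
      %{add: %{index: index, alias: alias_name, is_write_index: index == latest}}
    end
  end
end

LogAliasActions.actions("logs", ["logs-2024.05.01", "logs-2024.05.02"])
# => [
#   %{add: %{alias: "logs", index: "logs-2024.05.01", is_write_index: false}},
#   %{add: %{alias: "logs", index: "logs-2024.05.02", is_write_index: true}}
# ]
```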

But the setting has another property: an alias can have only one write index. If you attempt to mark multiple indices as the write index, the request will fail. And as we've seen earlier, a single alias update request is atomic, so the whole request will fail.

As such, a solution that works for my specific use case is to always set is_write_index: true.
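That rule can be expressed as a small check. WriteIndexModel is a hypothetical, in-memory sketch (not Elasticsearch code) that tracks, for a single alias, which indices carry it and whether each one is flagged is_write_index. An addition that would leave two write indices is rejected and the previous state is kept, the same way the whole request is rejected.

```elixir
defmodule WriteIndexModel do
  # Hypothetical toy model, not Elasticsearch code.
  # State for one alias: %{index_name => is_write_index}.

  # Adds the alias to `index`. If that would leave more than one write
  # index, the change is rejected and the previous state is returned.
  def add(state, index, write? \\ false) do
    new_state = Map.put(state, index, write?)
    write_indices = for {name, true} <- new_state, do: name

    if length(write_indices) > 1 do
      {:error, :more_than_one_write_index, state}
    else
      {:ok, new_state}
    end
  end
end

# Start from an alias that points nowhere (e.g. after the removals ran).
{:ok, after_first} = WriteIndexModel.add(%{}, "test_index", true)

WriteIndexModel.add(after_first, "test_index2", true)
# => {:error, :more_than_one_write_index, %{"test_index" => true}}
```

Without the flag, the same second addition succeeds and the alias ends up on two indices.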

Let's see what happens then. Let's add is_write_index: true to the "add" part of alias request:

```elixir
defmodule ESAliases.IndexManagement.AliasConcurrentWriteIndex do
  # Same swap as before, but the added alias is also marked as the write index.
  def run(index_name) do
    req =
      Req.new(
        url: "http://localhost:9200/_aliases",
        headers: %{"content-type": "application/json"},
        json: %{
          actions: [
            %{remove: %{index: "*", alias: "alias_1"}},
            %{add: %{index: index_name, alias: "alias_1", is_write_index: true}}
          ]
        }
      )

    IO.inspect(Req.post!(req))
  end
end

tasks = []
tasks = [Task.async(fn -> ESAliases.IndexManagement.AliasConcurrentWriteIndex.run("test_index") end) | tasks]
tasks = [Task.async(fn -> ESAliases.IndexManagement.AliasConcurrentWriteIndex.run("test_index2") end) | tasks]
Task.await_many(tasks)
```

When I now execute the above code, I see the following two responses:

- Status: 200; "acknowledged" => true
- Status: 500; "reason" => "alias [alias_1] has more than one write index [test_index2,test_index]"

Great! One of the requests failed because it was trying to add alias_1 with is_write_index to an index while the alias was already pointing to another index with the same flag.
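In code, the losing process needs to tell this expected failure apart from real errors. AliasUpdateResult below is a hypothetical helper: it assumes the error body follows Elasticsearch's usual %{"error" => %{"reason" => ...}} shape and matches on the reason text seen above, which is brittle if that message ever changes. It accepts a Req.Response (or any map with :status and :body), so it can be tested without a cluster.

```elixir
defmodule AliasUpdateResult do
  # Hypothetical helper. Works on a Req.Response struct or any map with
  # :status and :body keys (map patterns also match structs).

  # The alias change was applied.
  def classify(%{status: 200, body: %{"acknowledged" => true}}), do: :ok

  # Assumed ES error shape: %{"error" => %{"reason" => "..."}, ...}.
  def classify(%{status: status, body: %{"error" => %{"reason" => reason}}})
      when is_binary(reason) do
    if String.contains?(reason, "more than one write index") do
      # Another process moved the alias first; treat as an expected outcome.
      {:error, :concurrent_update}
    else
      {:error, {status, reason}}
    end
  end

  # Anything else is passed through unchanged for the caller to handle.
  def classify(%{status: status, body: body}), do: {:error, {status, body}}
end

AliasUpdateResult.classify(%{status: 200, body: %{"acknowledged" => true}})
# => :ok
```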

This is undoubtedly a little hacky and misuses the flag. But it works! It enforces that a single alias always points to a single index.

Note that we have no control over which of the two indices the alias ends up pointing to. Within the application I'm working on this is not a big deal (as opposed to having it point to two indices and returning duplicated search results), since eventual consistency is fine: subsequent processes will make sure the templates are up to date.

Conclusion

Elasticsearch has its fair share of quirks. Sometimes it's easier to find a hacky solution than to rewrite large parts of an existing application.

Setting is_write_index: true whenever adding an alias can serve as a simple way of enforcing that the alias points to exactly one index.

Code can be found here: https://github.com/d1am0nd/elasticsearch-aliases